British Red Cross raised £2.35 million in three hours during One Love Manchester, watched live by 22.6 million people. How did the charity’s donations platform cope with the massively increased influx of traffic? Chris Walker, CTO at digital agency Friday, which built the platform, explains:

Friday’s involvement in One Love began at 1.30pm, Thursday 1 June, when the British Red Cross (BRC) team told us they were partnering with the BBC and One Love Manchester, to support the concert scheduled for that coming Sunday evening.

Friday had soft-launched the new donations platform for BRC a few weeks previously. What the team now wanted to know was what it would take for the platform to receive donations from across the world during a huge, live public event.

Could we, in 3 days, scale a platform specified to cope with the expected peaks and troughs of BRC’s UK-based campaigns, and make it capable of handling huge waves of traffic over an extended time from the 43 countries to which the concert would be broadcast?

Yes, we could – and did. The donations platform was architected precisely so it could scale.

Opportunity NOC

We assembled a team in under an hour and began building a network operations centre (NOC) in our London office to support the donations platform in the run up to One Love, during the event, and afterwards.

The platform had already been tested to exceed the peaks predicted for BRC’s UK campaigns, but now we would need to test its capacity to an order of magnitude higher.

The team’s job was to operationalise the NOC: set up roles and responsibilities, establish communications channels, and model what-if scenarios, such as: technical failure, payment gateway failure, operational failure, and a critical incident in or around the event.

We extended real-time analytics into every dimension and level of the platform, such as load performance, users, payments, response time and error rates.

We had to be ready for crazy response rates, so we pushed testing to three times the traffic volume expected from the event. Testing finished at 7pm on Friday, so we took Saturday off and reconvened at the NOC at 4pm on Sunday. One Love started at 7pm.

Failure Anticipated

Our Ops team monitored performance, error rates, functional performance and load times; the Sitecore lead engineers who developed the platform worked alongside them in case it needed changing on the fly; and a release management team was on hand to provide lightweight QA to ensure changes – if required – could be deployed seamlessly.

We weren’t disappointed. Ten minutes into the concert a downstream service failed, overloaded by the traffic we were passing to it.

We immediately invoked one of the contingency scenarios and introduced platform changes. A patch was identified, modified and released within 20 minutes, with no interruption to the donations platform and no visible effect for users.

Scaling The Peaks

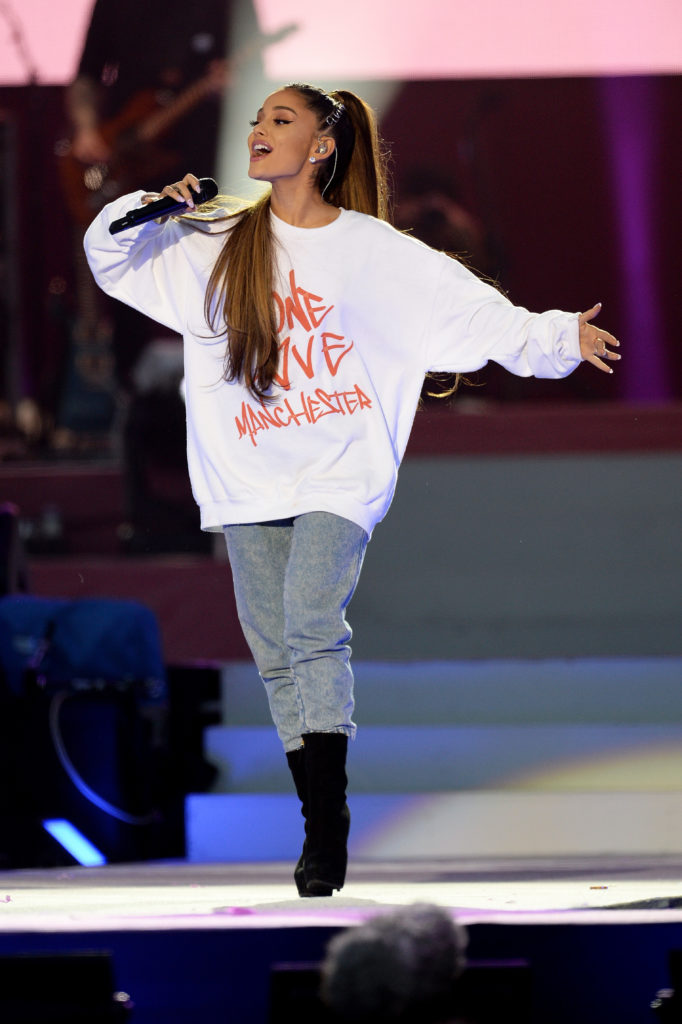

Traffic spiked as predicted around specific calls to action or acts on stage, such as when Imogen Heap and then Justin Bieber appeared. The URL redcross.org.uk/love was broadcast on massive screens and redirected to the donations platform, sending already high traffic screaming upwards.

At peak we processed 398,000 requests a minute. Users saw an average response time of 0.1s (minimum 0.02s, maximum 1.19s) whether they were in the UK or the other side of the world. Bandwidth peaked at 375MB/minute, and we were executing over 1,300 write operations per second into the donations database – with the service bus handling over one million messages in the peak hour of viewing.
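To put those peak figures on a common footing, here is a minimal sketch that converts them to per-second rates. It uses only the numbers quoted above; the variable names are ours, and the service bus figure is a lower bound because the article says "over one million".

```python
# Peak figures as quoted in the article.
REQUESTS_PER_MINUTE = 398_000
BANDWIDTH_MB_PER_MINUTE = 375
BUS_MESSAGES_PER_HOUR = 1_000_000  # "over one million", so a lower bound


def per_second(count, period_seconds):
    """Convert a count observed over a period into an average per-second rate."""
    return count / period_seconds


requests_per_second = per_second(REQUESTS_PER_MINUTE, 60)
bandwidth_mb_per_second = per_second(BANDWIDTH_MB_PER_MINUTE, 60)
bus_messages_per_second = per_second(BUS_MESSAGES_PER_HOUR, 3600)

print(f"{requests_per_second:,.0f} requests/s")
print(f"{bandwidth_mb_per_second:.2f} MB/s")
print(f"{bus_messages_per_second:,.0f} service bus messages/s (at least)")
```

These are averages over the peak minute or hour, so momentary bursts within those periods would have been higher.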

During the One Love Manchester concert, nearly 700,000 users hit BRC’s site, with a peak of 33,000 concurrent users and 823 donations a minute – higher than the number of register-to-vote users a few hours before the 22 May deadline.

Nevertheless, our servers never exceeded 50% capacity at any point. During the weekend the website handled more than 1.8 million users, compared to 24,000 the previous weekend.

55% of users were from the USA, 35% from the UK; and as expected, the vast majority (94%) were mobile users.

One Love ended at 10pm and by 11.30pm the traffic spikes had abated, so we left the platform running unattended. Nevertheless, traffic remained high: 5,000 people were on BRC’s site at 8.30am the next day. Usually it’s about 100.

Above & Beyond

We architected the donations platform to scale up way beyond the peaks of traffic usually expected from BRC’s campaigns. You have to do this when the purpose of the project is to handle, at short notice, things outside your control, but where you are a critical part of the response.

When it comes to monitoring strategy, web analytics aren’t good enough. You need low-level real-time insight into every part of the platform, and the ability to aggregate up to multiple dimensions of data that can be interpreted and acted on in real time by an empowered and capable team.
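As an illustration of aggregating low-level data up to several dimensions, here is a minimal sketch of a rolling-window metrics store. It is not the tooling we used on the night: the `Sample` fields, the `RollingMetrics` class and the 60-second window are all hypothetical, and it assumes samples arrive in timestamp order.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One observed request (hypothetical fields, for illustration only)."""
    timestamp: float      # seconds since some reference point
    region: str           # e.g. "UK", "US"
    component: str        # e.g. "web", "payments"
    response_time: float  # seconds
    is_error: bool


class RollingMetrics:
    """Keeps recent samples and summarises them by any one dimension."""

    def __init__(self, window_seconds=60.0):
        self.window_seconds = window_seconds
        self._samples = deque()

    def record(self, sample):
        # Assumes samples arrive in timestamp order.
        self._samples.append(sample)
        cutoff = sample.timestamp - self.window_seconds
        while self._samples and self._samples[0].timestamp <= cutoff:
            self._samples.popleft()

    def summary(self, dimension):
        """Group samples in the window by a field name such as 'region'."""
        groups = defaultdict(list)
        for sample in self._samples:
            groups[getattr(sample, dimension)].append(sample)
        return {
            key: {
                "count": len(items),
                "avg_response_time": sum(i.response_time for i in items) / len(items),
                "error_rate": sum(i.is_error for i in items) / len(items),
            }
            for key, items in groups.items()
        }


metrics = RollingMetrics(window_seconds=60)
metrics.record(Sample(0.0, "UK", "web", 0.10, False))
metrics.record(Sample(30.0, "US", "web", 0.20, False))
metrics.record(Sample(45.0, "US", "payments", 0.40, True))

by_region = metrics.summary("region")
by_component = metrics.summary("component")
```

The same samples answer both "how is the US doing?" and "how is payments doing?", which is the kind of multi-dimensional view that web analytics alone does not give you.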

We’ve proved that we can cope with pretty much any requirement for business-critical customer-facing platforms needing transaction processing, high-volume reporting or real-world event or incident support. For example: fundraising, public events, emergency response, or travel disruption.

With Amazon Web Services, you incur the costs of scaling only once you scale up, and they are gone as soon as you scale down. If you architect the solution correctly – to cope with crazy – then you don’t need idle spare capacity wasting money.
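The cost argument can be made concrete with a rough sketch. Every number here is hypothetical: the instance counts, the hours and the hourly rate are arbitrary illustrative values, not BRC's actual footprint or real AWS pricing.

```python
def always_on_cost(peak_instances, total_hours, hourly_rate):
    """Cost of keeping peak capacity running for the whole period."""
    return peak_instances * total_hours * hourly_rate


def elastic_cost(schedule, hourly_rate):
    """Cost when capacity follows demand.

    `schedule` is a list of (instances, hours) pairs covering the period.
    """
    return sum(instances * hours for instances, hours in schedule) * hourly_rate


# Hypothetical week: 2 instances for most of it, 20 for a six-hour event.
HOURLY_RATE = 1   # arbitrary cost units per instance-hour
WEEK_HOURS = 168
schedule = [(2, 162), (20, 6)]

fixed = always_on_cost(20, WEEK_HOURS, HOURLY_RATE)
elastic = elastic_cost(schedule, HOURLY_RATE)

print(f"always-on at peak size: {fixed} units")
print(f"scaled on demand:       {elastic} units")
```

Under these assumed numbers the scaled-on-demand week costs a fraction of the always-on one; the exact ratio depends entirely on how short the peak is relative to the baseline.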

It’s not just technology; it’s the people and their passion for doing it right; it’s about lightweight but robust processes; and it’s about giving people the authority to act in real time. It’s not rocket science; it’s attitudinal.

Article by Chris Walker, CTO & COO, WeAreFriday

About Chris:

Chris is an award-winning digital technologist. He was a digital technical authority across high-profile advertising and pure-play digital agencies for 12 years, designing, coding and deploying systems and platforms on behalf of clients like HSBC, Comic Relief, Nuffield Health, AgeUK, British Airways and Volkswagen, before co-founding Friday (We Are Friday Ltd) in 2009.

Since 2009, Chris has led the creation and growth of Friday’s engineering team, which now has a set of in-house capabilities with a depth normally only associated with a traditional “system integrator”. Chris works with Friday’s clients to understand how technology delivery and operational controls can help deliver a better customer experience – Chris believes passionately in the power of technology as an enabler whilst insisting it remains invisible to the end user.

Source: WeAreFriday